Text, media, and large language models

When people interact and communicate with each other in the political, social, or economic spheres, they often use written text. Politicians and political parties, for example, use election manifestos and campaign flyers to communicate with potential voters, and these have long been used to measure their ideological positions (Volkens et al. 2014; Laver, Benoit, and Garry 2003; Slapin and Proksch 2008). Newspapers, secondly, routinely report on economic developments, and the underlying sentiment or “tone” of these reports can be used to get a sense of how the economy overall is developing (Ozgun and Broekel 2021). And, finally, a lot of social interaction now happens online on social media sites, where users post and engage with others’ posts. All of this taken together provides a vast amount of data (e.g., Hilbert and López 2011) that can be used to study and explain political, social, and economic behavior.

The catch, however, is that someone needs to analyze all this data. According to one estimate, Twitter (now X) alone produces somewhere between 500’000 and almost 5’000’000 tweets on a single day (Ulizko et al. 2021), and even a fraction of this is much more information than any individual human can process in a reasonable amount of time. Plus, the sheer amount of text data that is now available is only one of the problems one runs into. Another one is that people obviously communicate in many different languages – English, French, Chinese, German, Spanish, Russian, and so on – and most individuals are only capable of reading and analyzing a small number of languages.

Qualitative methods for analyzing text definitely run into limits here, and so do older quantitative methods like classifiers, topic models, or ideological scaling because they also require either a set of human-classified texts as training data or produce results that are not always straightforward to interpret (Grimmer and Stewart 2013; Gentzkow, Kelly, and Taddy 2019; see also Molina and Garip 2019).

This is where large language models (LLMs) make a big difference. As anyone who has ever tried out tools like ChatGPT or Copilot (so, everyone?) knows, LLMs are “smart” enough to process – meaning classify, translate, summarize, or check – even longer text passages according to specific criteria or demands and produce results in a format that human users can explicitly specify. Their big disadvantage is that they, as the name indicates, are large and require serious amounts of computational firepower to perform complex operations on large amounts of text.

However, if one works with reasonably small amounts of short segments of text such as tweets and wants to get only relatively simple operations done – such as identifying the main topic – it is possible to use LLMs for text analysis on a regular laptop, without any need for massive GPU-powered server farms. First, ollama, an open-source framework for LLMs (see https://github.com/ollama/ollama), makes it possible to run smaller LLMs on one’s own laptop, for free. In addition, the mall package for R (https://mlverse.github.io/mall/) makes it possible to use ollama LLMs directly within R on a given dataset, for example to translate, classify, or otherwise “evaluate” a sequence of texts that is stored in a variable (“vector”).

The rest of this post shows how you can put this into action with an example analysis of tweets on immigration by French right and radical right politicians (Pietrandrea and Battaglia 2022).

LLMs and ollama

The LLM zoo

Probably the most widely known family of LLMs is the GPT series developed by OpenAI, which is what runs under ChatGPT’s “hood” – but these models are by far not the only ones. Nowadays, there is a proper zoo of LLMs. Some of these models are proprietary – meaning you have to pay to be able to use them – but there are also many others which are open-source and free to use for anyone. The Llama (or LLaMa) family of LLMs that was developed by Meta (the company that owns Facebook and Instagram) is one example (Touvron et al. 2023).

LLMs also differ in size and there are generally always larger and smaller versions of a given LLM. The llama3.2 model, for example, is available in 1B and 3B versions, meaning one contains 1 billion parameters and the other 3 billion. Both versions of llama3.2, in turn, are significantly smaller than the llama3.1 models, which contain 8B, 70B, or 405B parameters. Smaller models are generally not as smart as larger models, but they also require less computing power. Therefore, if you can do a certain task with a smaller model without sacrificing (too much) quality, then that is usually worth doing. (Remember the stuff on “parsimony” from your methods course? This is what that was about.)
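To make the link between model size and hardware a bit more concrete, here is a rough back-of-envelope sketch. It assumes that memory use is dominated by the model weights and that every parameter is stored with a fixed number of bits (16 bits, or 4 bits for a heavily compressed version). Both are simplifying assumptions of mine, not figures published by ollama or Meta, and real memory use will be higher.

```r
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: every parameter takes `bits_per_param` bits; all overhead is ignored.
approx_weight_gb <- function(n_params, bits_per_param = 16) {
  n_params * bits_per_param / 8 / 1e9   # bits -> bytes -> gigabytes
}

# Parameter counts of the model versions mentioned above
sizes <- c("1B" = 1e9, "3B" = 3e9, "8B" = 8e9, "70B" = 70e9, "405B" = 405e9)

data.frame(
  model_size = names(sizes),
  gb_16bit   = approx_weight_gb(sizes, 16),
  gb_4bit    = approx_weight_gb(sizes, 4)
)
```

Under these assumptions, an 8B model needs about 16 GB for its weights at 16 bits per parameter and about 4 GB at 4 bits, which illustrates why the smaller versions are the realistic choice on a laptop.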

Installing ollama

ollama was created to make it easier to access all the various LLMs from their different providers. Simply put, ollama is a little program that allows you to download and run any of the long list of open-source models they have available (see https://ollama.com/search) with a few lines of code.

You can download and install ollama directly from their website: https://ollama.com/download.

Using ollama

There are two ways in which you can use ollama.

The first one is to use the ChatGPT-like chat-window that ollama comes with. Here, you choose one of the available models and then interact with it just like you would with ChatGPT or Copilot.1

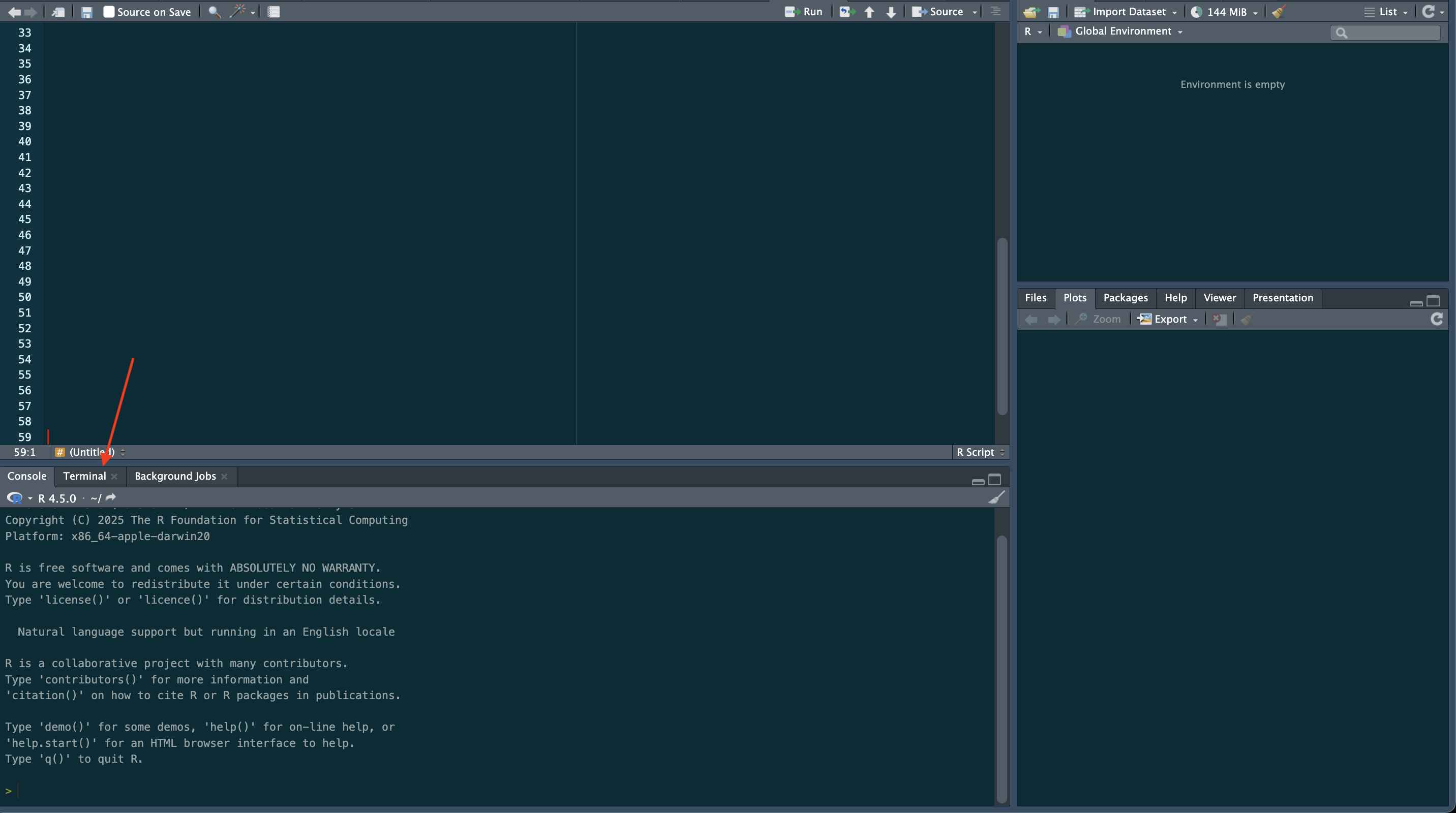

The second and “native” way of accessing ollama is via the Terminal on Mac or the Command-Line Interface (CLI) on Windows. You can access them directly from within RStudio if you open the Terminal tab on the bottom (next to the Console tab; see Figure 1 below). When you do that, you should see another blinking cursor waiting for commands to execute. This works just like R – you type in a command, hit enter, and the computer does what you asked (or returns an error message) – only that you work with different programs and thus different languages.2

When you have navigated to the Terminal/CLI, you can start working with ollama. For example, to see which models you have currently installed on your computer, you would run in your Terminal or CLI (not in R):

ollama list

Most likely, you will see no models listed since you haven’t installed any models yet.

To install a model, you use ollama pull <model>. For example, to install the llama3.1 model, you would run:

ollama pull llama3.1

ollama will then go and download the requested model – which will usually take a bit of time. Again, we are talking about large models here!

Once that is done, you can start chatting with your new model! To run a model, you use ollama run <model> – so in our case:

ollama run llama3.1

After a few moments, the command line will change and you will see:

>>>> Send a message (/? for help)

This is now basically a stripped-down version of ChatGPT, and it works the same way. You type in a message or request, hit enter, and the model gives you an answer to the best of its ability.

For example, see what happens when you ask the model to “Write something funny.”:

>>>> Write something funny.

If you want to stop the model, you can do so with /bye and then enter:

>>>> /bye

You will then get back to the standard command line interface, where you can install and run other LLMs.

Running ollama from within R with mall

With a simple chat interface – a window like the one in ChatGPT, or the ollama interface in the command line – we can already give our LLM some task to do. We could, for example, feed it a single tweet and then ask it to classify or translate it for us. But this only works when we work with a handful of tweets or other small pieces of text. If we are working with a large dataset of tweets or similar text, this approach is usually not feasible!

A much more convenient way to use an ollama LLM is to use it programmatically: to store all the different texts we want to analyze in a dataset, import that dataset into R, and then call the LLM from within R to let it automatically go through the entire dataset, work with all of the texts, one after the other, and then store the result – e.g., a translation or classification – as a new variable in the dataset. This is, in essence, what the mall package for R does.

You can install mall directly from CRAN with install.packages() – in R! Once you have done that, you load it with library().
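The two steps just described can be sketched as follows. This version only checks whether mall is already installed and prints a reminder otherwise, so that it does not download anything without you asking for it; the variable name mall_installed is my own choice.

```r
# Check whether mall is available before trying to load it
mall_installed <- requireNamespace("mall", quietly = TRUE)

if (mall_installed) {
  library(mall)
} else {
  # One-time step, run by hand: install.packages("mall")
  message('mall is not installed yet; run install.packages("mall") first.')
}
```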

mall can use both local LLMs that are installed on your computer via ollama and remote LLMs like ChatGPT, but in the latter case only if you have access to their API (which is generally a service you need to pay for).

For this analysis, we use local LLMs via ollama.

To be able to use ollama from within R, ollama needs to be running in the background. To set this up, you just need to go back to the Terminal or CLI and run ollama serve. You will then get a bit of computer gibberish and the Terminal will be busy – basically, ollama is up and running and ready to serve you a model of your choice. You can then move back to R.
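If you are unsure whether ollama is actually up, you can check from within R. The following is a minimal sketch, assuming that ollama listens on its default local address (http://localhost:11434); the helper name ollama_is_running() is hypothetical and not part of mall or ollama.

```r
# Returns TRUE if something answers at the given address, FALSE otherwise.
# Assumption: ollama uses its default local address and port.
ollama_is_running <- function(base_url = "http://localhost:11434") {
  reply <- tryCatch(
    suppressWarnings(readLines(base_url, n = 1, warn = FALSE)),
    error = function(e) character(0)   # connection refused, timeout, etc.
  )
  length(reply) > 0
}

if (!ollama_is_running()) {
  message("ollama does not seem to be running; start it with `ollama serve`.")
}
```

Note that this only tells you that a server responds at that address, not which models are installed.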

Next, we specify which LLM we want to run with the llm_use() function from mall. In our case, we only have the llama3.1 model installed, so we run that one:

llm_use("ollama", "llama3.1", seed = 42)

── mall session object
Backend: ollama
LLM session:
  model:llama3.1
  seed:42
R session:
  cache_folder:/var/folders/9x/9t19vvdn5pv84n6k_9lwqqm40000gn/T//RtmpXjkX1a/_mall_cache651363608aac

Once this is taken care of, we are all set and can do our analysis.3

Example analysis: French politicians’ tweets about migration

To illustrate how one can analyze text data with mall and ollama, we will work with a dataset of tweets on migration from various French right and radical right politicians between 2011 and 2022 that were collected for the MIGR-TWIT project (see https://doi.org/10.5281/zenodo.7257708). Tweets are a good place to start when it comes to text analysis and LLMs because they are short, so computing power and processing time are less of an issue.

Before we import the dataset, we quickly load the tidyverse to be able to use it for data management:

library(tidyverse)

── Attaching core tidyverse packages ──────────────────────── tidyverse 2.0.0 ──
✔ dplyr     1.1.4     ✔ readr     2.1.5
✔ forcats   1.0.0     ✔ stringr   1.5.1
✔ ggplot2   3.5.2     ✔ tibble    3.2.1
✔ lubridate 1.9.4     ✔ tidyr     1.3.1
✔ purrr     1.0.4
── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──
✖ dplyr::filter() masks stats::filter()
✖ dplyr::lag()    masks stats::lag()
ℹ Use the conflicted package (<http://conflicted.r-lib.org/>) to force all conflicts to become errors

Data import

You can import the dataset directly from the Zenodo data archive:

tweets <- read.csv("https://zenodo.org/records/7347479/files/FR-R-MIGR-TWIT-2011-2022_meta.csv?download=1", sep = ";")

You see directly in the Environment that the dataset contains around 40’000 tweets. We can also do a quick inspection with glimpse() to get a sense of what the variables look like:

glimpse(tweets)

Rows: 40,112

Columns: 44

$ data__id <chr> "82713564338597888", "433669…

$ data__text <chr> "Dans cette vidéo, je dénonç…

$ data__lang <chr> "fr", "fr", "data__lang", "f…

$ data__created_at <chr> "2011-06-20T07:37:05.000Z", …

$ author__username <chr> "@dupontaignan", "@dupontaig…

$ data__author_id <chr> "38170599", "38170599", "dat…

$ data__conversation_id <chr> "82713564338597888", "433669…

$ data__public_metrics__retweet_count <chr> "2", "1", "data__public_metr…

$ data__public_metrics__reply_count <chr> "1", "0", "data__public_metr…

$ data__public_metrics__like_count <chr> "2", "0", "data__public_metr…

$ data__public_metrics__quote_count <chr> "0", "0", "data__public_metr…

$ data__reply_settings <chr> "everyone", "everyone", "dat…

$ data__possibly_sensitive <chr> "False", "False", "data__pos…

$ data__source <chr> "Twitter Web Client", "Twitt…

$ data__geo__place_id <chr> "", "", "data__geo__place_id…

$ data__referenced_tweets__type <chr> "", "", "data__referenced_tw…

$ data__referenced_tweets__id <chr> "", "", "data__referenced_tw…

$ data__in_reply_to_user_id <chr> "", "", "data__in_reply_to_u…

$ data__entities__hashtags__start <chr> "", "", "data__entities__has…

$ data__entities__hashtags__end <chr> "", "", "data__entities__has…

$ data__entities__hashtags__tag <chr> "", "", "data__entities__has…

$ data__entities__mentions__start <chr> "", "", "data__entities__men…

$ data__entities__mentions__end <chr> "", "", "data__entities__men…

$ data__entities__mentions__username <chr> "", "", "data__entities__men…

$ data__entities__mentions__username.1 <chr> "", "", "data__entities__men…

$ data__entities__mentions__id <chr> "", "", "data__entities__men…

$ data__entities__urls__start <chr> "86", "", "data__entities__u…

$ data__entities__urls__end <chr> "105", "", "data__entities__…

$ data__entities__urls__url <chr> "http://t.co/J8ipeOY", "", "…

$ data__entities__urls__expanded_url <chr> "http://dai.ly/geL6Zm", "", …

$ data__entities__urls__display_url <chr> "dai.ly/geL6Zm", "", "data__…

$ data__entities__urls__status <chr> "200", "", "data__entities__…

$ data__entities__urls__unwound_url <chr> "https://www.dailymotion.com…

$ data__context_annotations__. <chr> "", "", "data__context_annot…

$ data__context_annotations__.__id <chr> "", "", "data__context_annot…

$ data__context_annotations__.__name <chr> "", "", "data__context_annot…

$ data__context_annotations__.__description <chr> "", "", "data__context_annot…

$ data__attachments__media_keys__001 <chr> "", "", "data__attachments__…

$ data__attachments__media_keys__002 <chr> "", "", "data__attachments__…

$ data__attachments__media_keys__003 <chr> "", "", "data__attachments__…

$ data__attachments__media_keys__004 <chr> "", "", "data__attachments__…

$ meta__newest_id <chr> "82713564338597888", "", "me…

$ meta__oldest_id <chr> "43366943381651456", "", "me…

$ meta__result_count <chr> "2", "", "meta__result_count…

Note that the actual tweets – the “meat part” of the dataset – are stored in the data__text variable, and that each tweet has a unique data__id. We also have information about which politician sent out the tweet and the exact time they did so, in addition to a range of other contextual variables.

Data cleaning

Let’s have a look at the first ten tweets:

tweets |>
  slice_head(n = 10) |>
  select(data__text)

data__text

1 Dans cette vidéo, je dénonçais l'arnaque des bons sentiments en matière d'immigration http://t.co/J8ipeOY

2 Je démonte les idées reçues sur l'immigration http://dai.ly/geL6Zm

3 data__text

4 A 15H aux questions d'actualités, j'interogerai M. Guéant sur les faits de délinquance liés à l'immigration clandestine venue de Tunisie

5 data__text

6 Nicolas Bay invité de « Objectif Elysée » en débat sur l’immigration | Front National: http://t.co/Pd7dHD3a

7 Guéant reconnaît le bilan dramatique de la politique d’immigration de #Sarkozy | Front National: http://t.co/uvLXTLre

8 Mandat de Nicolas #Sarkozy : une explosion de la fraude sociale liée à une explosion de l’immigration | Front National: http://t.co/ycJInrbH

9 Départ de Maxime Tandonnet : Nicolas #Sarkozy se révèle en écartant de l Elysée son seul conseiller anti-immigration !: http://t.co/uGt5Dmk

10 Marine Le Pen s'adresse aux policiers, gendarmes et douaniers de France,sur la lutte contre l'immigration clandestine: http://t.co/OoUxIjs

It turns out that there are some irrelevant rows containing only the variable name (data__text).

These rows need to be filtered out, and (just to make sure) we also filter out any observation where the data__id variable is empty:

tweets |>
  filter(data__id != "data__id" & data__id != "") -> tweets

In addition, you might have noticed that most of the tweets contain links and hashtags, and one also includes an @-symbol. The links themselves are not really relevant now, so it might be best to remove them, and we can do the same with the @ and # symbols.
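A side note on the row filter above: the stray header rows are also the reason why glimpse() reports every variable as text (<chr>). Once they are gone, counts and dates can be converted to proper types. The following is a minimal sketch on a toy data frame, written in base R so that it runs without extra packages; the IDs and the second timestamp are made-up values, and the new variable names (likes, date) are my own.

```r
# Toy data with hypothetical values; the second row is a stray header row
toy <- data.frame(
  data__id = c("id_1", "data__id", "id_2", ""),
  data__created_at = c("2011-06-20T07:37:05.000Z", "data__created_at",
                       "2012-01-15T10:00:00.000Z", ""),
  data__public_metrics__like_count = c("2", "data__public_metrics__like_count",
                                       "0", "")
)

# Same condition as in the filter() call, written in base R
toy <- toy[toy$data__id != "data__id" & toy$data__id != "", ]

# With the header rows gone, counts and dates can be converted safely
toy$likes <- as.integer(toy$data__public_metrics__like_count)
toy$date  <- as.Date(substr(toy$data__created_at, 1, 10))  # keep YYYY-MM-DD only

str(toy[, c("data__id", "likes", "date")])
```

Converting before filtering would instead turn the header rows into NAs with a warning, which is why the order of the two steps matters.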

To do that across all the tweets in one go, we use something called a regular expression (or “regex”). Simply put, a regular expression is a way to tell R (or other programming languages) to look for patterns in a given text and then do something with those pieces of text that correspond to a given pattern (e.g., delete or change).

Writing good regular expressions is a science in and of itself (see Wickham and Grolemund 2016, chap. 14.3) and too complicated to go into detail here. Fortunately, AI chatbots like ChatGPT can help with writing them, and there is a website (https://regex101.com/) that you can use to check that the expression you got from them actually does what it is supposed to do.

Copilot suggested the following regular expression to filter out all @ and # symbols and links starting with “http”: @|#|https?://\\S+. This expression (very simply put) tells R to look for the @-symbol or (|) the #-symbol or (|) a piece of text starting with “http” or “https” (the s? makes the “s” optional) and followed first by “://” and then any sequence of characters that are not white space (\\S+; the backslash is doubled because the pattern is written as an R string).

We use this expression in the str_remove_all() function to remove these parts of each tweet and store the cleaned tweets as a new variable (clean_text):

tweets |>
  mutate(clean_text = str_remove_all(data__text, "@|#|https?://\\S+")) -> tweets

If we then try again, we get the first ten tweets:

tweets |>
  slice_head(n = 10) |>
  select(clean_text)

clean_text

1 Dans cette vidéo, je dénonçais l'arnaque des bons sentiments en matière d'immigration

2 Je démonte les idées reçues sur l'immigration

3 A 15H aux questions d'actualités, j'interogerai M. Guéant sur les faits de délinquance liés à l'immigration clandestine venue de Tunisie

4 Nicolas Bay invité de « Objectif Elysée » en débat sur l’immigration | Front National:

5 Guéant reconnaît le bilan dramatique de la politique d’immigration de Sarkozy | Front National:

6 Mandat de Nicolas Sarkozy : une explosion de la fraude sociale liée à une explosion de l’immigration | Front National:

7 Départ de Maxime Tandonnet : Nicolas Sarkozy se révèle en écartant de l Elysée son seul conseiller anti-immigration !:

8 Marine Le Pen s'adresse aux policiers, gendarmes et douaniers de France,sur la lutte contre l'immigration clandestine:

9 RT laprovence: Un élu quitte ses fonctions à l'UMP pour protester contre la "frilosité" de son parti. UMP rebélion immigration Luca

10 Guéant : des mots contre l’immigration de travail mais des actes pour la favoriser comme jamais ! fn2012

Looks like everything worked.
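Small details in a regular expression matter, so it is worth testing a pattern on a few strings where you know the correct answer. The sketch below uses two made-up example strings (not from the dataset) and base R's gsub() instead of str_remove_all() so that it runs without extra packages; the helper clean() is hypothetical. It compares a pattern where the “s” is optional (https?) with one where the “p” is optional (http?).

```r
# Two made-up example strings (not from the dataset)
x <- c("Voir http://t.co/abc #immigration",
       "Merci @user https://t.co/xyz")

clean <- function(text, pattern) {
  out <- gsub(pattern, "", text, perl = TRUE)  # remove every match
  trimws(gsub("\\s+", " ", out))               # tidy up leftover spaces
}

clean(x, "@|#|https?://\\S+")  # "s?" makes the s optional: both link types match
clean(x, "@|#|http?://\\S+")   # "p?" makes the p optional: https links survive
```

The first call returns "Voir immigration" and "Merci user", while the second one leaves the https link in the second string untouched.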

Translating tweets to English

The tweets may now be “clean” but they are still in French, which is not a language everyone is familiar with.

To get a better sense of what the tweets are about, we can use the llm_translate() function from the mall package to translate them into English. To use this function, we specify which variable we want translated and the language we want it translated into, and the function will then let the LLM we loaded earlier (llama3.1) go through the dataset and translate the individual entries.

Let’s try it out by translating the first ten tweets into English (translating all of them would take way too much time). To limit the operation to only the first ten, we use the slice_head() function. We also record the start and finish time so that we can see how long the process takes:

start <- Sys.time()

tweets |>
  slice_head(n = 10) |>
  select(clean_text) |>
  llm_translate(clean_text,
                language = "english") -> translated

end <- Sys.time()
diff <- end - start

This will then take a few moments! In my case, it takes exactly:

diff

Time difference of 1.134099 mins

This is actually not too bad – a human translator would most likely have needed a bit more time to do this job.
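To get a feeling for why translating the entire dataset is not realistic here, we can extrapolate from this timing. This is a rough sketch that assumes the run time grows linearly with the number of tweets and uses the rounded figure of 40’000 tweets; both are simplifications, and the variable names are my own.

```r
secs_for_ten   <- 1.134099 * 60   # measured run time for ten tweets, in seconds
secs_per_tweet <- secs_for_ten / 10
n_tweets       <- 40000           # "around 40'000" tweets, rounded

hours_total <- secs_per_tweet * n_tweets / 3600
round(hours_total, 1)
```

Under these assumptions, translating everything would keep the laptop busy for roughly 75 hours, or a bit more than three days.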

Let’s look at the translations, which are automatically stored in a new variable called .translation:

translated |>
  select(.translation)

.translation

1 I'm exposing the scam of good intentions when it comes to immigration.

2 Challenging common misconceptions about immigration.

3 I will ask Mr. Guéant about the facts related to delinquency linked to clandestine immigration from Tunisia at 15H as part of current events questioning.

4 In this show on immigration, Nicolas Bay from the Front National Party discusses French integration policies and their effects on society.

5 Claudie Haigneré and Jean-Pierre Chevènement were already warning about it in 2007.

6 Nicolas Sarkozy's mandate has seen a social fraud explosion linked to an immigration explosion.

7 Depart of Maxime Tandonnet: Nicolas Sarkozy reveals himself by ejecting from the Elysee his sole immigration advisor.

8 French National Front leader Marine Le Pen addresses police officers, gendarmes and customs officials on the fight against clandestine immigration.

9 A UMP elected official has resigned to protest his party's lack of engagement on immigration issues.

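10 "Guéant speaks against immigration for work, but his actions promote it more than ever."

The fact that the tweets were originally written in French still shows, but the translation worked reasonably well overall – and non-francophones can now make sense of most of the tweets. We can see that one tweet is about police officers, another is about “social fraud”, and quite a few of them are about Nicolas Sarkozy, the former French President. A comparison with the French originals also shows that the model is not fully reliable here: translation 4 adds details that are not in the original tweet, and translation 5 does not match its original at all.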

Identifying tweets with a specific content

In a real-life analysis, we may be interested in finding out how often politicians (or others) talk or tweet about a certain topic. To answer this question, we can go through our database of tweets and identify all tweets that refer to some keyword or topic of interest.

To do that, we can use the llm_verify() function from mall. This function works very similarly to llm_translate(): we need to specify which variable we want to be checked by the LLM and some criterion that we want to be used.

For example, let’s ask the LLM to identify all tweets that are related to social fraud. To do that, we simply write out the request and we also specify which name the new variable should have (pred_name = "socialfraud"). We save the result in a new data.frame called checked:

start <- Sys.time()

tweets %>%
  select(clean_text) %>%
  slice_head(n = 10) %>%
  llm_verify(clean_text, "does this tweet mention social fraud?",
             pred_name = "socialfraud") -> checked

end <- Sys.time()
diff <- end - start
diff

Time difference of 33.21647 secs

This took less than a minute.

Let’s see what the results look like:

checked |>
  select(clean_text, socialfraud)

clean_text

1 Dans cette vidéo, je dénonçais l'arnaque des bons sentiments en matière d'immigration

2 Je démonte les idées reçues sur l'immigration

3 A 15H aux questions d'actualités, j'interogerai M. Guéant sur les faits de délinquance liés à l'immigration clandestine venue de Tunisie

4 Nicolas Bay invité de « Objectif Elysée » en débat sur l’immigration | Front National:

5 Guéant reconnaît le bilan dramatique de la politique d’immigration de Sarkozy | Front National:

6 Mandat de Nicolas Sarkozy : une explosion de la fraude sociale liée à une explosion de l’immigration | Front National:

7 Départ de Maxime Tandonnet : Nicolas Sarkozy se révèle en écartant de l Elysée son seul conseiller anti-immigration !:

8 Marine Le Pen s'adresse aux policiers, gendarmes et douaniers de France,sur la lutte contre l'immigration clandestine:

9 RT laprovence: Un élu quitte ses fonctions à l'UMP pour protester contre la "frilosité" de son parti. UMP rebélion immigration Luca

10 Guéant : des mots contre l’immigration de travail mais des actes pour la favoriser comme jamais ! fn2012

socialfraud

1 0

2 0

3 0

4 0

5 0

6 1

7 0

8 0

9 0

10 0

We know already that only tweet number 6 was about social fraud and that is also what the model found. This is encouraging because it gives us some confidence that when we scale the operation up – let it run on the entire dataset – we should get sensible results.4
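This kind of check can be made a bit more systematic by comparing the model's output with a hand-coded version of the same tweets. The following is a minimal sketch using the ten results shown above; the hand-coded vector simply encodes that only tweet 6 mentions social fraud, and the variable names are my own.

```r
# Hand-coded labels for the ten tweets shown above (1 = mentions social fraud)
hand_coded <- c(0, 0, 0, 0, 0, 1, 0, 0, 0, 0)
# Model output, copied from the socialfraud column
model      <- c(0, 0, 0, 0, 0, 1, 0, 0, 0, 0)

# Cross-tabulate the model's answers against the human coding
table(model = model, hand_coded = hand_coded)

accuracy  <- mean(model == hand_coded)
precision <- sum(model == 1 & hand_coded == 1) / sum(model == 1)
recall    <- sum(model == 1 & hand_coded == 1) / sum(hand_coded == 1)
c(accuracy = accuracy, precision = precision, recall = recall)
```

All three measures are 1 here, but with only ten tweets and a single positive case this says little about performance on the full dataset. A larger hand-coded sample would be needed for a real validation.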

We can also see if the LLM can identify if tweets are about specific politicians. Let’s for example try to identify tweets that are in some way about Marine Le Pen, the leader of the Rassemblement National (RN), France’s main radical-right party:

start <- Sys.time()

tweets %>%
  select(clean_text) %>%
  slice_head(n = 10) %>%
  llm_verify(clean_text, "does this tweet mention Marine Le Pen?",
             pred_name = "lepen") -> checked

end <- Sys.time()
diff <- end - start
diff

Time difference of 31.03894 secs

If we check the new result, we can see that the model did correctly identify the one tweet that was about Marine Le Pen:

checked |>
  select(clean_text, lepen)

clean_text

1 Dans cette vidéo, je dénonçais l'arnaque des bons sentiments en matière d'immigration

2 Je démonte les idées reçues sur l'immigration

3 A 15H aux questions d'actualités, j'interogerai M. Guéant sur les faits de délinquance liés à l'immigration clandestine venue de Tunisie

4 Nicolas Bay invité de « Objectif Elysée » en débat sur l’immigration | Front National:

5 Guéant reconnaît le bilan dramatique de la politique d’immigration de Sarkozy | Front National:

6 Mandat de Nicolas Sarkozy : une explosion de la fraude sociale liée à une explosion de l’immigration | Front National:

7 Départ de Maxime Tandonnet : Nicolas Sarkozy se révèle en écartant de l Elysée son seul conseiller anti-immigration !:

8 Marine Le Pen s'adresse aux policiers, gendarmes et douaniers de France,sur la lutte contre l'immigration clandestine:

9 RT laprovence: Un élu quitte ses fonctions à l'UMP pour protester contre la "frilosité" de son parti. UMP rebélion immigration Luca

10 Guéant : des mots contre l’immigration de travail mais des actes pour la favoriser comme jamais ! fn2012

lepen

1 0

2 0

3 0

4 0

5 0

6 0

7 0

8 1

9 0

10 0

Again, the model seems to perform well enough that we could let it run on the entire dataset and still be reasonably confident that we get usable results.

Extracting information

Sometimes we want to know not only whether a given piece of text contains something we are interested in, but also to extract that particular text element and use it for further analysis (e.g., a word cloud). To do that, we can use the llm_extract() function. Here, we again name the variable that we want examined and the piece of information that the model is supposed to look for and extract, and we can also specify a name for the new variable that will contain the extracted text elements.

For example, let’s try to extract the French politicians that are named in the first ten tweets:

start <- Sys.time()

tweets %>%
  select(clean_text) %>%
  slice_head(n = 10) %>%
  llm_extract(clean_text, "French politician",
              pred_name = "politician") -> extracted

end <- Sys.time()
diff <- end - start
diff

Time difference of 35.08848 secs

This did not take very long, and a human coder would probably need longer just to be able to write down the names of the politicians.

However, the results are not fully convincing: it seems the model hallucinated and stated a name even when the tweet did not contain actual names:

extracted |>
  select(clean_text, politician)

clean_text

1 Dans cette vidéo, je dénonçais l'arnaque des bons sentiments en matière d'immigration

2 Je démonte les idées reçues sur l'immigration

3 A 15H aux questions d'actualités, j'interogerai M. Guéant sur les faits de délinquance liés à l'immigration clandestine venue de Tunisie

4 Nicolas Bay invité de « Objectif Elysée » en débat sur l’immigration | Front National:

5 Guéant reconnaît le bilan dramatique de la politique d’immigration de Sarkozy | Front National:

6 Mandat de Nicolas Sarkozy : une explosion de la fraude sociale liée à une explosion de l’immigration | Front National:

7 Départ de Maxime Tandonnet : Nicolas Sarkozy se révèle en écartant de l Elysée son seul conseiller anti-immigration !:

8 Marine Le Pen s'adresse aux policiers, gendarmes et douaniers de France,sur la lutte contre l'immigration clandestine:

9 RT laprovence: Un élu quitte ses fonctions à l'UMP pour protester contre la "frilosité" de son parti. UMP rebélion immigration Luca

10 Guéant : des mots contre l’immigration de travail mais des actes pour la favoriser comme jamais ! fn2012

politician

1 jean marie le pen

2 marine le pen

3 guéant

4 nicolas bay

5 sarkozy

6 nicolas sarkozy

7 nicolas sarkozy

8 marine le pen

9 luca

10 clerk

One thing we can try to keep the model from hallucinating is to add an additional prompt to instruct it to assign an NA whenever no politician is explicitly mentioned. We can do that with the additional_prompt option (“argument”) within llm_extract():

start <- Sys.time()

tweets %>%
  select(clean_text) %>%
  slice_head(n = 10) %>%
  llm_extract(clean_text, "French politician",
              additional_prompt = "return NA if the tweet does not explicitly mention a widely known French politician",
              pred_name = "politician") -> extracted

end <- Sys.time()
diff <- end - start
diff

Time difference of 33.62775 secs

extracted |>
  select(clean_text, politician)

clean_text

1 Dans cette vidéo, je dénonçais l'arnaque des bons sentiments en matière d'immigration

2 Je démonte les idées reçues sur l'immigration

3 A 15H aux questions d'actualités, j'interogerai M. Guéant sur les faits de délinquance liés à l'immigration clandestine venue de Tunisie

4 Nicolas Bay invité de « Objectif Elysée » en débat sur l’immigration | Front National:

5 Guéant reconnaît le bilan dramatique de la politique d’immigration de Sarkozy | Front National:

6 Mandat de Nicolas Sarkozy : une explosion de la fraude sociale liée à une explosion de l’immigration | Front National:

7 Départ de Maxime Tandonnet : Nicolas Sarkozy se révèle en écartant de l Elysée son seul conseiller anti-immigration !:

8 Marine Le Pen s'adresse aux policiers, gendarmes et douaniers de France,sur la lutte contre l'immigration clandestine:

9 RT laprovence: Un élu quitte ses fonctions à l'UMP pour protester contre la "frilosité" de son parti. UMP rebélion immigration Luca

10 Guéant : des mots contre l’immigration de travail mais des actes pour la favoriser comme jamais ! fn2012

politician

1 NA

2 NA

3 brice guéant

4 nicolas bay

5 sarkozy

6 nicolas sarkozy

7 nicolas sarkozy

8 marine le pen

9 lucas graham

10 guéant

This result is better (the first two tweets are correctly given NAs), but there are still some issues with the information extracted from the later tweets: “brice guéant” and “lucas graham”, for example, do not appear in that form in tweets 3 and 9.
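A cheap way to flag such cases automatically is to check whether the extracted name literally occurs in the tweet. The following is a minimal sketch in base R, using three of the tweets and the names returned in the first llm_extract() run above; the variable names are my own, and the check is only a screening device, not a substitute for reading the flagged tweets.

```r
# Three tweets and the names returned in the first llm_extract() run above
text <- c(
  "Je démonte les idées reçues sur l'immigration",
  "Nicolas Bay invité de « Objectif Elysée » en débat sur l’immigration | Front National:",
  "Marine Le Pen s'adresse aux policiers, gendarmes et douaniers de France,sur la lutte contre l'immigration clandestine:"
)
extracted_name <- c("marine le pen", "nicolas bay", "marine le pen")

# TRUE if the extracted name literally occurs in the tweet (ignoring case);
# NA results are never counted as a match
name_in_text <- mapply(
  function(name, tweet) {
    !is.na(name) && grepl(tolower(name), tolower(tweet), fixed = TRUE)
  },
  extracted_name, text, USE.NAMES = FALSE
)

name_in_text
```

The first tweet is flagged (FALSE) because “marine le pen” does not occur in it, while the other two pass. Keep in mind that a literal match is strict: a correct full name that the model expanded from a surname would also be flagged, so flagged rows are candidates for manual review rather than proven errors.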

This goes to show that LLMs, even though they are powerful, are not perfect. So the advice by Grimmer and Stewart (2013, 271) – “validate, validate, validate” – is still relevant.

One potential way to fix this could be to be even more specific in the additional prompt or to simply try out a different, perhaps more powerful and accurate model.

Conclusion

This post showed you how you can analyze political tweets using open-source large language models in R with the mall package and ollama. While LLMs themselves are of course not even remotely close to being “simple” models, mall and ollama make it quite simple to use them for research projects.

Overall, the model we used, llama3.1, performed reasonably well when it comes to identifying if tweets are about a specific topic or politician, but struggled with correctly extracting information from the tweets. The second issue is something you can come across, and it can make sense to try out different models to see which one produces the most convincing results. ollama offers you a wide variety of free-to-use models that you can play around with.

The mall package also provides a few more functions that do other relevant operations such as classifying pieces of text into groups depending on their contents (llm_classify()), extracting the tone or sentiment of a piece of text (llm_sentiment()), or running a custom request (llm_custom()). In my (very limited) experience, some of these functions work better with longer pieces of text such as a speech given by a politician. I found this to be especially the case with llm_classify().
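As a rough idea of how two of these functions could be combined, here is a minimal sketch. It assumes that mall is installed, that ollama is running, and that llm_use() has been called as shown earlier. The wrapper function classify_and_score(), the three topic labels, and the new variable names (topic, tone) are hypothetical choices of mine, and I have not validated the output on this dataset.

```r
# Assumptions: mall is installed, ollama is running, and llm_use() was called.
# The labels are hypothetical examples, not categories from the dataset.
classify_and_score <- function(data) {
  data |>
    mall::llm_classify(clean_text,
                       labels = c("crime", "economy", "other"),
                       pred_name = "topic") |>
    mall::llm_sentiment(clean_text,
                        options = c("positive", "negative", "neutral"),
                        pred_name = "tone")
}

# Example call (not run here because it needs a running model):
# tweets |> slice_head(n = 10) |> classify_and_score()
```

As with llm_extract(), it makes sense to compare the resulting topic and tone variables with your own reading of a handful of tweets before relying on them.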

Obviously, when you work with longer pieces of text, your analyses with LLMs can take a lot longer than a few simple analyses with short tweets – so plan accordingly, and make sure to always test analyses on a subset of your dataset to see if you get sensible results before you run them on the entire dataset (and potentially waste a lot of time).
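For such a test run, a random subset is often more informative than the first few rows, which may all come from the same account or period. The following is a minimal sketch in base R with a stand-in data frame; the number of rows and the sample size of 50 are arbitrary choices of mine. With the tidyverse loaded, slice_sample(n = 50) does the same job.

```r
set.seed(42)  # makes the random draw reproducible

# Stand-in for the cleaned tweets; the contents are placeholders
toy <- data.frame(id = 1:1000, clean_text = paste("tweet", 1:1000))

test_rows   <- sample(nrow(toy), size = 50)  # 50 rows, drawn without replacement
test_sample <- toy[test_rows, ]

nrow(test_sample)
```

Running your prompt on test_sample first gives a quick impression of both the quality of the results and the time a full run would need.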

If you’re now very motivated to do some analysis of your own but wondering where you could find relevant text data, you are in luck: Erik Gahner’s “Dataset of Political Datasets” includes a list of datasets of political speeches from different countries and international institutions (https://github.com/erikgahner/PolData?tab=readme-ov-file#political-speeches-and-debates).

Before you get started with one of these larger datasets, it can also make sense to learn a bit more about how to handle text data in R so that you are prepared in case you need to do any data cleaning or management. The article by Welbers et al. (2017), chapter 13 in Urdinez and Cruz (2020), or the book on text analysis by Silge and Robinson (2017) are good places to start.5

References

Footnotes

1. You might notice that things can take a lot longer when you run an LLM on your laptop compared to when you use OpenAI’s servers, and that the results are not always as good as the ones you get from ChatGPT. This goes to show just how much there is happening behind the curtains when you use ChatGPT or Copilot.

2. See https://github.com/ollama/ollama for an overview of the basic commands for ollama.

3. I also specify a “seed” number to make my analysis reproducible on my end. This is not strictly necessary (see here for an explanation: https://mlverse.github.io/mall/articles/caching.html).

4. The model sometimes returns “invalid output” that is stored as a missing value (NA). If this is a persistent and significant problem in your own analysis, you might have to check the quality of your text data and consider modifying your prompt (see also further below).

5. See also https://www.tidytextmining.com/.

Citation

@online{knotz2025,

author = {Knotz, Carlo},

title = {Using {LLMs} to Work with {Twitter} Data},

date = {2025-09-10},

url = {https://cknotz.github.io/getstuffdone_blog/posts/llms/},

langid = {en}

}